Automatic recognition saves a considerable amount of time compared to manual input. Transcribing one hour of media by hand, such as a conference recording, takes about 8 hours of very tiring work.

AI has made meteoric progress in transcription quality. Until 2022, accuracy was around 75%, so results required manual proofreading and correction. Processing was relatively slow, and the language to be recognized had to be specified in advance.

With the latest version, put into production in December 2023, the average error rate has dropped to 1%! The saving in processing time is just as spectacular: an hour-long audio or video file is processed in only one minute. It is no longer necessary to indicate the source language, as it is recognized automatically. Even better: if several languages are spoken in a video, they are all recognized, without any prior configuration. The gains in simplicity and productivity are considerable.

The automatic generation of subtitles and transcriptions is one of Streamlike’s optional services.

Differences between transcription and subtitles

Automatic recognition produces both a transcript (plain text) and subtitles (structured text with timecodes).

The transcript is metadata attached to a media item. It serves accessibility, by making the content of the audio track available to people who are deaf or hard of hearing, as well as text search and SEO. Because the transcript is plain text, it can be read without playing the media (audio or video).

Subtitles appear only while the media is playing, and they are synchronized with it. When enabled, they are displayed on top of the player. Viewers can choose a language among those available or turn all subtitles off.
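
To make the distinction concrete, here is a minimal Python sketch of how the same recognized speech could be stored once as timed cues and then rendered either as subtitles or as a transcript. The `Cue` class and the function names are hypothetical, and WebVTT is used only as a common example of a subtitle format; nothing here describes Streamlike's actual data model or export formats.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One subtitle entry: a piece of text with its display interval (hypothetical model)."""
    start: float  # seconds from the beginning of the media
    end: float    # seconds from the beginning of the media
    text: str


def format_timestamp(seconds: float) -> str:
    """Format a number of seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: list[Cue]) -> str:
    """Subtitles: structured text where every line of speech carries timecodes."""
    lines = ["WEBVTT", ""]
    for cue in cues:
        lines.append(f"{format_timestamp(cue.start)} --> {format_timestamp(cue.end)}")
        lines.append(cue.text)
        lines.append("")  # a blank line separates cues
    return "\n".join(lines)


def to_transcript(cues: list[Cue]) -> str:
    """Transcript: the same words as plain text, readable without the player."""
    return " ".join(cue.text for cue in cues)
```

Calling `to_webvtt` and `to_transcript` on the same list of cues shows the difference: the first output only makes sense alongside playback, while the second can be indexed, searched, or read on its own.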

Automatic translations

Until now, the quickest way was to have an AI translate a subtitle file. Because the translator generally leaves formatting and timecodes untouched, the translated file is directly usable, subject to proofreading and a few corrections. The problem is that these are line-by-line translations, so the meaning of long sentences spread over several lines can be lost. What’s more, a single segmentation does not suit all languages, as the number of words can vary considerably from one language to another.
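
The line-by-line approach described above can be sketched in a few lines of Python. This is an illustration under assumptions, not the method used by any particular tool: the input is assumed to be in SRT format, and `translate` is a hypothetical callable standing in for whatever translation service is used.

```python
import re
from typing import Callable

# Matches an SRT or WebVTT timing line such as "00:00:01,000 --> 00:00:03,000".
TIMECODE = re.compile(r"^\d{2}:\d{2}:\d{2}[,.]\d{3} --> \d{2}:\d{2}:\d{2}[,.]\d{3}")


def translate_subtitles(srt_text: str, translate: Callable[[str], str]) -> str:
    """Translate only the text lines of a subtitle file, one line at a time.

    Cue numbers, timecodes, and blank separator lines are copied unchanged.
    Simplification: a text line made only of digits is treated as a cue number
    and is therefore left untranslated.
    """
    out = []
    for line in srt_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.isdigit() or TIMECODE.match(stripped):
            out.append(line)  # structure: keep as-is
        else:
            out.append(translate(line))  # text: translated in isolation
    return "\n".join(out)
```

Because `translate` only ever sees one line, a sentence split across two cues is translated as two unrelated fragments, and the cue boundaries stay where the source language put them. Those are the two limitations noted above.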

Now, AI can directly generate subtitles in any target language. The meaning of the text is preserved, and the segmentation adapts to the number of words each language needs.

Necessary precautions

We still recommend checking transcriptions. Ambient noise, unintelligible words, accented speech, or overlapping speech can still lead to errors. While brand names are generally well recognized, the spelling of certain proper names or unusual acronyms needs to be checked.

Submitting data to an AI is never trivial. In the case of corporate content, certain statements may be of a confidential nature. The AI used by Streamlike is hosted in France. No data is retained once processing has been completed. Data submitted or produced never circulates outside France, and is not used to train AI models.

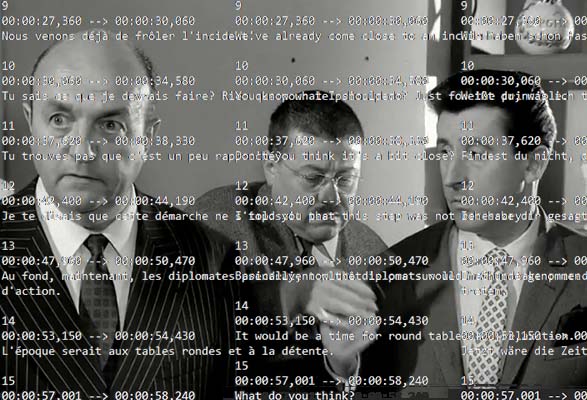

Example of automatic transcription

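As an example, we took a workshop on “Responsible Streaming”. The video lasts 28 minutes and was transcribed in less than a minute. Proofreading and a few corrections using Streamlike’s built-in subtitle editor took less than 5 minutes. At the rate of about 8 hours of manual work per hour of media, transcribing the same video by hand would have taken roughly 3 hours 45 minutes.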